I let an AI badly classify what I am according to its neural network (and you can too)

While billionaire techpreneurs like Elon Musk and Bill Gates feel that cutting-edge artificial intelligence could spell the end of humanity if left unchecked, it’s important to remember that today’s AI is more dumb than dangerous.

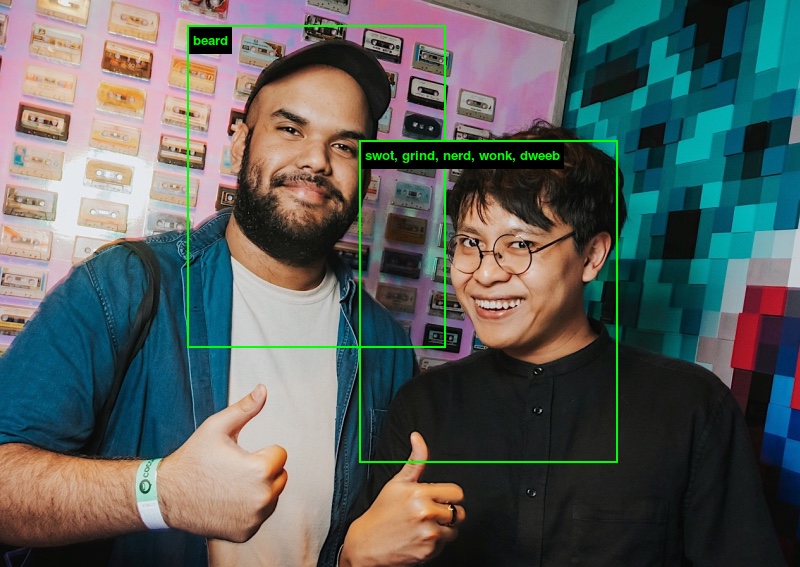

I mean sure, AI has defeated humans in chess, Go, StarCraft II, and Quake III Arena, but it's rubbish when it comes to identifying people. You can see how this plays out with ImageNet Roulette, an AI-driven image classifier that will try (and fail spectacularly) to figure out what kind of person you are, be it a biographer, a psycholinguist, a dweeb, or a myopic person.

I should know. I got all of those labels.

The brainchild of AI researcher Kate Crawford and artist Trevor Paglen, ImageNet Roulette is a web tool crafted as part of the ongoing Training Humans art exhibition at the Fondazione Prada Osservatorio museum in Milan. It’s also a statement on how technical systems can go wrong when neural networks are trained on problematic data, so much so that ImageNet Roulette can even categorise people as spree killers and first offenders.

Or in the case of a friend who got his selfie analysed, a “rape suspect”. Me, I got off relatively lightly with the AI determining that I was a nerd — which ain’t wrong, really.

Both Paglen and Crawford acknowledge that their web tool will result in a number of “problematic, offensive and bizarre” designations, with potentially misogynistic and racist terminology used.

[embed]https://twitter.com/katecrawford/status/1173666732923396098[/embed]

According to the creators, the web tool classifies people based on ImageNet, a dataset of over 14 million images scraped from the internet and organised into more than 20,000 categories. While it’s mostly used by researchers to train object recognition systems, Paglen and Crawford wanted to see what would happen if they trained an AI model exclusively on the dataset’s “Person” category, which has 2,833 sub-categories.
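For the curious, here is a rough sketch of what training "exclusively" on one category could look like in practice. It is a toy illustration and not the creators' actual code: the label hierarchy, the sample file names, and the helper functions `descendants` and `filter_to_person` are all made up for this example.

```python
# Toy label hierarchy (parent -> children). These labels are illustrative
# only and are NOT the real ImageNet category tree.
HIERARCHY = {
    "entity": ["person", "animal"],
    "person": ["scientist", "relative"],
    "scientist": ["psycholinguist"],
    "relative": ["grandmother"],
    "animal": ["dog"],
}


def descendants(root, hierarchy):
    """Return every label below `root` in the hierarchy (root excluded)."""
    found = []
    stack = list(hierarchy.get(root, []))
    while stack:
        label = stack.pop()
        found.append(label)
        stack.extend(hierarchy.get(label, []))
    return sorted(found)


def filter_to_person(samples, hierarchy):
    """Keep only (image, label) pairs whose label sits under 'person'."""
    allowed = set(descendants("person", hierarchy))
    return [(img, lbl) for img, lbl in samples if lbl in allowed]


# Hypothetical labelled images standing in for the full dataset.
SAMPLES = [
    ("img1.jpg", "dog"),
    ("img2.jpg", "grandmother"),
    ("img3.jpg", "psycholinguist"),
]

PERSON_SAMPLES = filter_to_person(SAMPLES, HIERARCHY)
print(PERSON_SAMPLES)
```

A model trained only on a subset like `PERSON_SAMPLES` has no other labels to choose from, so in this sketch every photo it sees would come back with some kind of person label, however badly it fits.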

“ImageNet classifies people into a huge range of types including race, nationality, profession, economic status, behaviour, character, and even morality,” noted the creators of ImageNet Roulette.

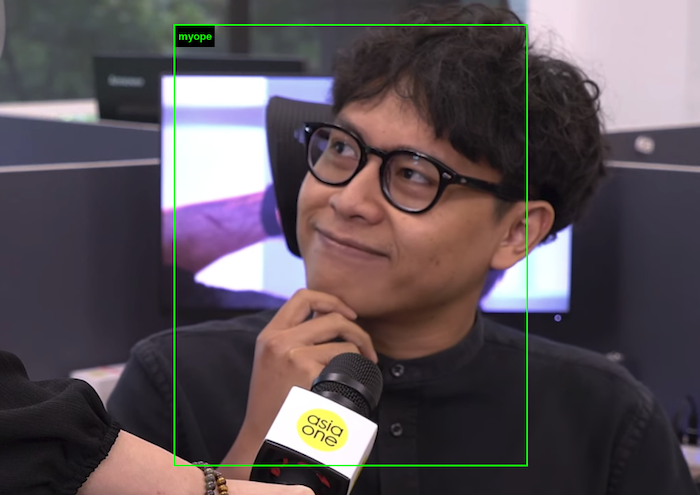

And as I found out after running a couple of self-portraits through the software, the AI is not the best evaluator when it comes to figuring out who or what you are. So far, it has correctly identified me as a “myope”, which is fairly obvious with the glasses. The recognition worked even when I uploaded screengrabs from videos.

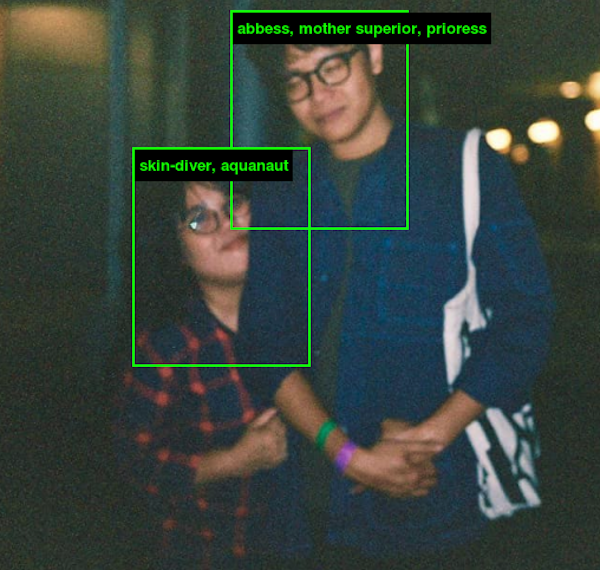

Grainy pics won’t work that well, like this photo of me and the missus that was shot on film.

Other times, the AI completely wonks out and seems to apply any label that’s vaguely associated with people who wear glasses. That includes identifying me as a grandmother.

You can see how other folks are having fun with it on Twitter, where some really hilarious results are getting shared.

[embed]https://twitter.com/vickyzmr/status/1173672528679751680[/embed]

[embed]https://twitter.com/taniajacob/status/1173676272851017730[/embed]

[embed]https://twitter.com/zlorine/status/1173689321481785344[/embed]

[embed]https://twitter.com/petetrainor/status/1174267888716386305[/embed]

[embed]https://twitter.com/_kumaaa/status/1174041368643211264[/embed]

As fun and addictive as it is, what can we learn from all this? "AI classifications of people are rarely made visible to the people being classified," said ImageNet Roulette's creators.

"ImageNet Roulette provides a glimpse into that process — and shows the ways things can go wrong."

[embed]https://twitter.com/katecrawford/status/1173668824329203712[/embed]

ilyas@asiaone.com